Advanced Patterns and Production Deployment

As your FastMCP applications grow from simple development tools to production-ready services, you'll need to consider advanced patterns, scalability, security, and deployment strategies. This section covers enterprise-grade FastMCP development and deployment.

Advanced Server Patterns

Server Composition and Modularity

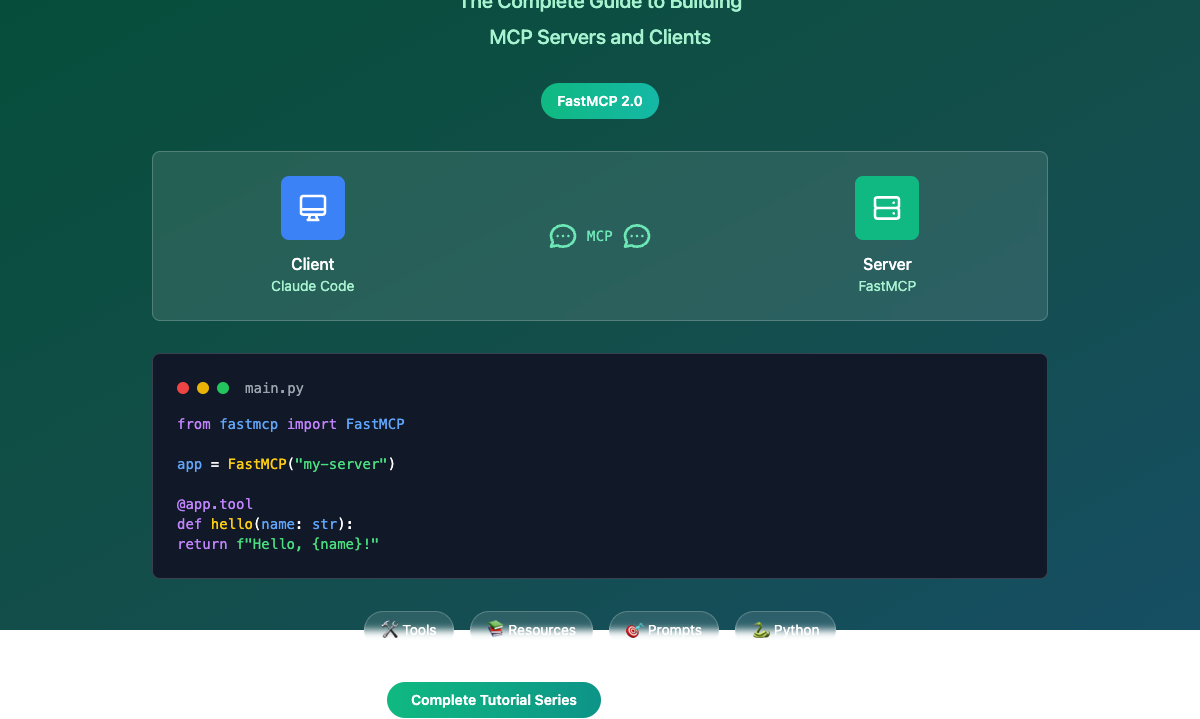

FastMCP 2.0 supports sophisticated server composition patterns that enable modular, maintainable applications:

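# auth_server.py
import os
from datetime import datetime, timedelta, timezone

import bcrypt
import jwt  # PyJWT
from fastmcp import FastMCP

auth_server = FastMCP("Authentication Service")

# Read the signing key from the environment instead of hard-coding it
JWT_SECRET_KEY = os.environ["JWT_SECRET_KEY"]

# Hypothetical user store mapping usernames to bcrypt hashes; replace with a real database,
# e.g. {"alice": bcrypt.hashpw(b"correct horse", bcrypt.gensalt())}
USERS: dict[str, bytes] = {}

def verify_credentials(username: str, password: str) -> bool:
    """Check a password against the stored bcrypt hash."""
    stored_hash = USERS.get(username)
    return stored_hash is not None and bcrypt.checkpw(password.encode(), stored_hash)

@auth_server.tool
def authenticate_user(username: str, password: str) -> dict:
    """Authenticate a user and return a JWT token."""
    if verify_credentials(username, password):
        # Short-lived token: PyJWT converts the datetime into a numeric "exp" claim
        expires = datetime.now(timezone.utc) + timedelta(hours=1)
        token = jwt.encode({"user": username, "exp": expires}, JWT_SECRET_KEY, algorithm="HS256")
        return {"token": token, "user": username, "authenticated": True}
    return {"authenticated": False, "error": "Invalid credentials"}

# data_server.py
import pandas as pd
from fastmcp import FastMCP

data_server = FastMCP("Data Processing Service")

@data_server.tool
def process_dataset(data_path: str, operation: str) -> dict:
    """Process a CSV dataset with the specified operation."""
    operations = {
        "summary": lambda df: df.describe().to_dict(),
        "count": lambda df: {"rows": len(df), "columns": len(df.columns)},
        # Wrap the list so every operation returns a dict, matching the annotation
        "columns": lambda df: {"columns": df.columns.tolist()},
    }
    # Reject unknown operations before touching the file
    if operation not in operations:
        raise ValueError(f"Unknown operation: {operation}")
    # In production, restrict data_path to an allowed directory
    df = pd.read_csv(data_path)
    return operations[operation](df)

# main_server.py
from datetime import datetime, timezone

from fastmcp import FastMCP

from auth_server import auth_server
from data_server import data_server

# Create main server and compose sub-servers
main_server = FastMCP(
    name="Enterprise Application",
    instructions="Comprehensive enterprise application with authentication and data processing",
)

# Mount sub-servers with prefixes; their tools are exposed as e.g. "auth_authenticate_user"
main_server.mount(auth_server, prefix="auth")
main_server.mount(data_server, prefix="data")

# Add main server tools
@main_server.tool
def health_check() -> dict:
    """Report basic status for the main server and the services mounted on it."""
    return {
        "status": "healthy",
        "services": ["auth", "data", "main"],
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }

if __name__ == "__main__":
    main_server.run(transport="http", host="0.0.0.0", port=8000)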
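To make the effect of `prefix` concrete, here is a toy model in plain Python of how mounting namespaces tool names. It is not FastMCP's implementation; it only mirrors the observable behavior in current FastMCP 2.x releases, where a mounted server's tools appear on the parent as `<prefix>_<tool name>`, so two services can each define a tool called `status` without colliding. The `Registry` class and `make_status` helper are hypothetical.

```python
from typing import Callable


class Registry:
    """Toy stand-in for a server's tool table (hypothetical, for illustration only)."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.tools: dict[str, Callable] = {}
        self.mounts: list[tuple[str, "Registry"]] = []

    def tool(self, fn: Callable) -> Callable:
        # Register a function under its own name, like a bare @server.tool decorator
        self.tools[fn.__name__] = fn
        return fn

    def mount(self, child: "Registry", prefix: str) -> None:
        # Keep a live reference, so tools added to the child later are still visible
        self.mounts.append((prefix, child))

    def list_tools(self) -> list[str]:
        names = list(self.tools)
        for prefix, child in self.mounts:
            names += [f"{prefix}_{name}" for name in child.list_tools()]
        return sorted(names)

    def call(self, name: str, **kwargs):
        if name in self.tools:
            return self.tools[name](**kwargs)
        for prefix, child in self.mounts:
            # Strip the prefix and delegate to the mounted child
            if name.startswith(prefix + "_"):
                return child.call(name[len(prefix) + 1:], **kwargs)
        raise KeyError(f"Unknown tool: {name}")


def make_status(service: str) -> Callable[[], str]:
    # Both sub-servers get a tool with the same name, "status"
    def status() -> str:
        return f"{service} ok"
    return status


auth = Registry("auth")
data = Registry("data")
main = Registry("main")
auth.tool(make_status("auth"))
data.tool(make_status("data"))
main.mount(auth, prefix="auth")
main.mount(data, prefix="data")
```

Here `main.list_tools()` yields `['auth_status', 'data_status']`, and `main.call("data_status")` is routed to the `data` registry. The live reference in `mount` is a deliberate choice of the toy: a tool registered on a child after mounting still shows up on the parent.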

Proxy Servers for API Integration

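Use a proxy server to re-expose an existing MCP server, local or remote, through a FastMCP server you control, for example to serve it over a different transport or to add local tools alongside the proxied ones: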

# api_proxy.py
from fastmcp import Client, FastMCP

# Client for the remote MCP server (here an SSE endpoint)
remote_client = Client("https://api.example.com/mcp/sse")

# as_proxy() is synchronous and returns a regular FastMCP server that forwards
# requests to the remote server, so no asyncio wrapper is needed
proxy_server = FastMCP.as_proxy(
    remote_client,
    name="API Proxy Server",
    instructions="Proxy for remote API services",
)

# Local tools can be registered alongside the proxied ones
@proxy_server.tool
def local_cache_status() -> dict:
    """Get local cache status."""
    # Placeholder values for illustration
    return {"cache_enabled": True, "cache_size": "50MB"}

if __name__ == "__main__":
    proxy_server.run(transport="http", host="0.0.0.0", port=9000)

Dynamic Tool Generation

Generate tools dynamically from configurations or OpenAPI specifications:

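# dynamic_tools.py
import httpx
import requests
import yaml
from fastmcp import FastMCP

mcp = FastMCP("Dynamic API Server")

def create_api_tool(endpoint_config: dict):
    """Dynamically create a tool from an API endpoint configuration."""
    # Explicit parameters (rather than **kwargs) give FastMCP a usable input schema
    def api_tool(params: dict | None = None, body: dict | None = None):
        response = requests.request(
            method=endpoint_config["method"],
            url=endpoint_config["url"],
            params=params,  # query-string parameters
            json=body,  # JSON request body
            timeout=30,
        )
        response.raise_for_status()
        return response.json()

    # Set function metadata; FastMCP uses it for the tool's name and description
    api_tool.__name__ = endpoint_config["name"]
    api_tool.__doc__ = endpoint_config["description"]
    return api_tool

# Load API configuration: a top-level "endpoints" list whose entries have
# name, description, method, and url keys
with open("api_config.yaml") as f:
    api_config = yaml.safe_load(f)

# Generate tools dynamically
for endpoint in api_config["endpoints"]:
    mcp.tool(create_api_tool(endpoint))

# Alternative: generate tools from an OpenAPI specification
def load_from_openapi(spec_url: str, base_url: str) -> FastMCP:
    """Build a server whose tools mirror the operations in an OpenAPI spec."""
    spec = httpx.get(spec_url).json()
    # from_openapi takes the parsed spec plus an async HTTP client for the target API
    client = httpx.AsyncClient(base_url=base_url)
    return FastMCP.from_openapi(openapi_spec=spec, client=client)

# Usage
# openapi_server = load_from_openapi("https://api.example.com/openapi.json", "https://api.example.com")
# mcp.mount(openapi_server, prefix="api")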
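Note that `create_api_tool` is a factory function rather than a closure defined directly inside the `for` loop. Python closures capture variables, not values, so closures created in the loop body would all read `endpoint` after the loop has finished and every generated tool would hit the last endpoint. The stdlib sketch below reproduces the pitfall and the factory fix with a hypothetical two-endpoint configuration; no HTTP calls are made, the tools just return their URL.

```python
from typing import Callable

# Hypothetical configuration, shaped like the api_config.yaml entries described above
ENDPOINTS = [
    {"name": "list_users", "description": "List users.", "url": "https://api.example.com/users"},
    {"name": "list_orders", "description": "List orders.", "url": "https://api.example.com/orders"},
]


def build_in_loop() -> list[Callable[[], str]]:
    tools = []
    for endpoint in ENDPOINTS:
        def tool() -> str:
            # Bug: `endpoint` is looked up when the tool runs, after the loop has finished
            return endpoint["url"]
        tools.append(tool)
    return tools


def make_tool(endpoint: dict) -> Callable[[], str]:
    # Fix: each factory call creates a new scope holding its own `endpoint`
    def tool() -> str:
        return endpoint["url"]
    tool.__name__ = endpoint["name"]
    tool.__doc__ = endpoint["description"]
    return tool


def build_with_factory() -> list[Callable[[], str]]:
    return [make_tool(endpoint) for endpoint in ENDPOINTS]
```

Calling each tool from `build_in_loop()` returns the `/orders` URL twice, while the tools from `build_with_factory()` return `/users` and `/orders` and carry the configured names.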

Production-Ready Authentication

JWT-Based Authentication

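# secure_server.py
import os

from fastmcp import FastMCP
from fastmcp.server.auth import BearerAuthProvider
from fastmcp.server.dependencies import AccessToken, get_access_token

# Validate incoming bearer JWTs against the token issuer's public key.
# The provider class and import path have changed across FastMCP 2.x releases;
# check the authentication docs for the version you have installed.
auth_provider = BearerAuthProvider(
    public_key=os.environ["JWT_PUBLIC_KEY"],  # PEM-encoded public key of the issuer
    issuer=os.environ.get("JWT_ISSUER"),  # optional: expected "iss" claim
    audience=os.environ.get("JWT_AUDIENCE"),  # optional: expected "aud" claim
)

# Authentication applies to the whole HTTP endpoint, so every tool on this
# server requires a valid token; there is no per-tool "public" flag
mcp = FastMCP("Secure Production Server", auth=auth_provider)

@mcp.tool
def get_sensitive_data() -> dict:
    """Get sensitive data for the authenticated caller."""
    # The validated access token for the current request
    access_token: AccessToken = get_access_token()
    return {
        "client_id": access_token.client_id,
        "data": "sensitive information",
        "scopes": access_token.scopes,
    }

# Public tools live on a separate server without an auth provider
public_mcp = FastMCP("Public Info Server")

@public_mcp.tool
def get_public_info() -> dict:
    """Get public information."""
    return {"status": "public", "version": "1.0.0"}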

API Key Authentication

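# api_key_server.py
import json
import os

from fastmcp import FastMCP
from fastmcp.exceptions import ToolError
from fastmcp.server.dependencies import get_http_headers

# FastMCP has no built-in API-key provider in the releases this sketch assumes,
# so each tool checks the X-API-Key header itself.
# Load keys from the environment rather than hard-coding them, e.g.
# MCP_API_KEYS='{"key123": {"name": "Client A", "permissions": ["read", "write"]}}'
API_KEYS: dict[str, dict] = json.loads(os.environ.get("MCP_API_KEYS", "{}"))

def require_permission(permission: str) -> dict:
    """Look up the caller's API key and check that it grants `permission`."""
    headers = {name.lower(): value for name, value in get_http_headers().items()}
    client = API_KEYS.get(headers.get("x-api-key", ""))
    if client is None:
        raise ToolError("Invalid or missing API key")
    if permission not in client["permissions"]:
        raise ToolError(f"API key lacks '{permission}' permission")
    return client

mcp = FastMCP("API Key Protected Server")

@mcp.tool
def write_data(data: dict) -> dict:
    """Write data - requires write permission."""
    client = require_permission("write")
    # Implementation
    return {"written": True, "client": client["name"]}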

Deployment Strategies

Docker Deployment

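# Dockerfile
FROM python:3.11-slim

WORKDIR /app

# Install dependencies
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt

# Copy application
COPY . .

# Create non-root user
RUN useradd -m -u 1000 mcpuser && chown -R mcpuser:mcpuser /app
USER mcpuser

# Health check: assumes server.py serves GET /health (FastMCP does not add one by default).
# python:3.11-slim ships without curl, so probe with Python's standard library instead.
HEALTHCHECK --interval=30s --timeout=3s --start-period=5s --retries=3 \
  CMD python -c "import urllib.request; urllib.request.urlopen('http://localhost:8000/health', timeout=2)" || exit 1

# Run server (server.py must bind to 0.0.0.0; FastMCP defaults to 127.0.0.1)
EXPOSE 8000
CMD ["python", "server.py"]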
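Slim Python base images such as `python:3.11-slim` do not include `curl`, so a health check that runs inside the container is easiest to write in Python itself. As a more readable alternative to a one-line command, a small standard-library script can serve as the health check: it reports healthy only when the endpoint answers with a 2xx status within the timeout. The file name `healthcheck.py` and the default URL are assumptions; point it at whatever health route your server actually exposes.

```python
import http.client
import urllib.request

DEFAULT_URL = "http://localhost:8000/health"  # assumed health route


def is_healthy(url: str = DEFAULT_URL, timeout: float = 2.0) -> bool:
    """Return True if `url` responds with a 2xx status within `timeout` seconds."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 300
    except (OSError, http.client.HTTPException):
        # OSError covers URLError/HTTPError (including 4xx/5xx), refused
        # connections, and timeouts; HTTPException covers malformed responses
        return False


def main(argv: list[str]) -> int:
    """Exit status for Docker: 0 means healthy, 1 means unhealthy."""
    return 0 if is_healthy(*argv[1:2]) else 1
```

Saved as `healthcheck.py` with `raise SystemExit(main(sys.argv))` under an `if __name__ == "__main__":` guard, it can be wired in with `HEALTHCHECK CMD python healthcheck.py`.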

# docker-compose.yml
services:
  fastmcp-server:
    build: .
    ports:
      - "8000:8000"
    environment:
      - FASTMCP_LOG_LEVEL=INFO
      - JWT_SECRET_KEY=${JWT_SECRET_KEY}
      - DATABASE_URL=${DATABASE_URL}
      # Other services are reachable by service name on the compose network
      - REDIS_URL=redis://redis:6379
    volumes:
      - ./logs:/app/logs
    depends_on:
      - redis
    restart: unless-stopped
    healthcheck:
      # python:3.11-slim has no curl, so probe with Python's standard library
      test: ["CMD", "python", "-c", "import urllib.request; urllib.request.urlopen('http://localhost:8000/health', timeout=5)"]
      interval: 30s
      timeout: 10s
      retries: 3

  redis:
    image: redis:7-alpine
    # No published ports: Redis stays private to the compose network
    volumes:
      - redis_data:/data
    restart: unless-stopped

  nginx:
    image: nginx:alpine
    ports:
      - "80:80"
      - "443:443"
    volumes:
      - ./nginx.conf:/etc/nginx/nginx.conf:ro
      - ./ssl:/etc/nginx/ssl:ro
    depends_on:
      - fastmcp-server
    restart: unless-stopped

volumes:
  redis_data:
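The compose file passes secrets and settings such as `JWT_SECRET_KEY` and `DATABASE_URL` into the container as environment variables, and Compose substitutes an empty string when a referenced host variable is unset. The server should therefore validate its configuration once at startup and fail fast with a clear message, rather than erroring on the first request. A minimal stdlib sketch; the `Settings` fields mirror the variables above, and the default log level is an assumption.

```python
import os
from dataclasses import dataclass
from typing import Mapping

LOG_LEVELS = {"DEBUG", "INFO", "WARNING", "ERROR", "CRITICAL"}


@dataclass(frozen=True)
class Settings:
    jwt_secret_key: str
    database_url: str
    log_level: str = "INFO"


def load_settings(env: Mapping[str, str] = os.environ) -> Settings:
    """Build Settings from environment variables, reporting every problem at once."""
    errors = []
    # Treat empty strings as missing: unset host variables arrive as ""
    missing = [name for name in ("JWT_SECRET_KEY", "DATABASE_URL") if not env.get(name)]
    if missing:
        errors.append(f"missing required variables: {', '.join(missing)}")
    log_level = env.get("FASTMCP_LOG_LEVEL", "INFO").upper()
    if log_level not in LOG_LEVELS:
        errors.append(f"FASTMCP_LOG_LEVEL must be one of {sorted(LOG_LEVELS)}")
    if errors:
        raise RuntimeError("Invalid configuration: " + "; ".join(errors))
    return Settings(env["JWT_SECRET_KEY"], env["DATABASE_URL"], log_level)
```

Calling `load_settings()` at the top of `server.py` makes a misconfigured container exit immediately, which `restart: unless-stopped` and the container logs surface far more clearly than a failing health check.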

Kubernetes Deployment

# k8s-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: fastmcp-server
  labels:
    app: fastmcp-server
spec:
  # MCP sessions over HTTP live in each server process's memory; with several
  # replicas, route a session's requests to the same pod or run the server statelessly
  replicas: 3
  selector:
    matchLabels:
      app: fastmcp-server
  template:
    metadata:
      labels:
        app: fastmcp-server
    spec:
      containers:
        - name: fastmcp-server
          image: your-registry/fastmcp-server:latest
          ports:
            - containerPort: 8000
          env:
            - name: FASTMCP_LOG_LEVEL
              value: "INFO"
            - name: JWT_SECRET_KEY
              valueFrom:
                secretKeyRef:
                  name: fastmcp-secrets
                  key: jwt-secret
          resources:
            requests:
              memory: "256Mi"
              cpu: "250m"
            limits:
              memory: "512Mi"
              cpu: "500m"
          # /health and /ready are not built into FastMCP; the server must serve them
          livenessProbe:
            httpGet:
              path: /health
              port: 8000
            initialDelaySeconds: 30
            periodSeconds: 10
          readinessProbe:
            httpGet:
              path: /ready
              port: 8000
            initialDelaySeconds: 5
            periodSeconds: 5
---
apiVersion: v1
kind: Service
metadata:
  name: fastmcp-service
spec:
  selector:
    app: fastmcp-server
  ports:
    - protocol: TCP
      port: 80
      targetPort: 8000
  # ClusterIP suffices because external traffic arrives through the Ingress below
  type: ClusterIP
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: fastmcp-ingress
  annotations:
    cert-manager.io/cluster-issuer: letsencrypt-prod
spec:
  # ingressClassName supersedes the deprecated kubernetes.io/ingress.class annotation
  ingressClassName: nginx
  tls:
    - hosts:
        - api.yourdomain.com
      secretName: fastmcp-tls
  rules:
    - host: api.yourdomain.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: fastmcp-service
                port:
                  number: 80
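The two probes answer different questions: liveness asks whether the process is stuck and should be restarted, while readiness asks whether this replica should receive traffic right now, for example only after its database pool has opened and not while it is draining for shutdown. A stdlib sketch of both endpoints; the `AppState` flags and dependency names are hypothetical, and in a FastMCP server you would expose the same `probe` logic through HTTP routes on the server rather than a separate `http.server`.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler


class AppState:
    """Hypothetical readiness flags updated by the application during startup/shutdown."""

    def __init__(self) -> None:
        self.lock = threading.Lock()
        self.dependencies = {"database": False, "redis": False}
        self.shutting_down = False

    def set_ready(self, name: str, ready: bool) -> None:
        with self.lock:
            self.dependencies[name] = ready


def probe(path: str, state: AppState) -> tuple[int, dict]:
    """Map a probe path to (HTTP status, JSON body)."""
    if path == "/health":
        # Liveness: the process can answer at all. Don't check dependencies here,
        # or a database outage would make Kubernetes restart otherwise healthy pods.
        return 200, {"status": "alive"}
    if path == "/ready":
        with state.lock:
            pending = [name for name, ok in state.dependencies.items() if not ok]
            draining = state.shutting_down
        if pending or draining:
            return 503, {"status": "not ready", "waiting_for": pending, "draining": draining}
        return 200, {"status": "ready"}
    return 404, {"error": "not found"}


def make_handler(state: AppState) -> type[BaseHTTPRequestHandler]:
    """Build a request handler class bound to `state`."""
    class ProbeHandler(BaseHTTPRequestHandler):
        def do_GET(self) -> None:
            status, body = probe(self.path, state)
            payload = json.dumps(body).encode()
            self.send_response(status)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(payload)))
            self.end_headers()
            self.wfile.write(payload)

    return ProbeHandler
```

A 503 from `/ready` only removes the pod from the Service's endpoints; it does not restart it, which is exactly the behavior you want during a temporary dependency outage.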

Monitoring and Observability

Logging and Metrics

# monitored_server.py
import functools
import logging
import time

from fastmcp import FastMCP
from prometheus_client import Counter, Histogram, start_http_server

# Set up metrics
TOOL_CALLS = Counter("mcp_tool_calls_total", "Total tool calls", ["tool_name", "status"])
TOOL_DURATION = Histogram("mcp_tool_duration_seconds", "Tool execution time", ["tool_name"])

# Configure logging
logging.basicConfig(
    level=logging.INFO,
    format="%(asctime)s - %(name)s - %(levelname)s - %(message)s",
    handlers=[logging.FileHandler("fastmcp.log"), logging.StreamHandler()],
)
logger = logging.getLogger(__name__)

mcp = FastMCP("Monitored Server")

def monitor_tool(func):
    """Decorator that adds metrics and logging to a synchronous tool."""
    # functools.wraps preserves the name, docstring, and signature that
    # FastMCP inspects to build the tool's schema
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        start_time = time.perf_counter()  # monotonic clock for durations
        tool_name = func.__name__
        try:
            logger.info("Starting tool: %s", tool_name)
            result = func(*args, **kwargs)
            TOOL_CALLS.labels(tool_name=tool_name, status="success").inc()
            logger.info("Tool %s completed successfully", tool_name)
            return result
        except Exception as e:
            TOOL_CALLS.labels(tool_name=tool_name, status="error").inc()
            logger.error("Tool %s failed: %s", tool_name, e)
            raise
        finally:
            duration = time.perf_counter() - start_time
            TOOL_DURATION.labels(tool_name=tool_name).observe(duration)
            logger.info("Tool %s took %.2fs", tool_name, duration)
    return wrapper

@mcp.tool
@monitor_tool
def process_data(data: list) -> dict:
    """Process data with monitoring."""
    # Processing logic
    return {"processed": len(data), "status": "success"}

if __name__ == "__main__":
    # Start the Prometheus metrics endpoint on its own port
    start_http_server(9090)
    mcp.run(transport="http", host="0.0.0.0", port=8000)
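The `monitor_tool` decorator above wraps only synchronous functions. Applied to an `async def` tool, `func(*args, **kwargs)` merely creates a coroutine: the timer stops before any work happens, every call is counted as a success, and exceptions raised while the coroutine runs bypass the `except` branch. A stdlib sketch of a decorator that handles both kinds; the in-memory `CALLS` and `DURATIONS` dictionaries are hypothetical stand-ins for the Prometheus metrics, and `slow_lookup` and `divide` are demo functions.

```python
import asyncio
import functools
import inspect
import time
from collections import defaultdict

# Hypothetical in-memory stand-ins for the Prometheus Counter and Histogram
CALLS: defaultdict[tuple[str, str], int] = defaultdict(int)
DURATIONS: defaultdict[str, list[float]] = defaultdict(list)


def _record(name: str, status: str, started: float) -> None:
    CALLS[(name, status)] += 1
    DURATIONS[name].append(time.perf_counter() - started)


def monitor_tool(func):
    """Count calls and time execution for both sync and async functions."""
    name = func.__name__

    if inspect.iscoroutinefunction(func):
        @functools.wraps(func)
        async def async_wrapper(*args, **kwargs):
            started = time.perf_counter()
            try:
                # Await inside the wrapper so the awaited work is what gets timed
                result = await func(*args, **kwargs)
            except Exception:
                _record(name, "error", started)
                raise
            _record(name, "success", started)
            return result
        return async_wrapper

    @functools.wraps(func)
    def sync_wrapper(*args, **kwargs):
        started = time.perf_counter()
        try:
            result = func(*args, **kwargs)
        except Exception:
            _record(name, "error", started)
            raise
        _record(name, "success", started)
        return result
    return sync_wrapper


@monitor_tool
async def slow_lookup(key: str) -> str:
    await asyncio.sleep(0.05)
    return key.upper()


@monitor_tool
def divide(a: float, b: float) -> float:
    return a / b
```

Returning an `async def` wrapper for coroutine functions also keeps `inspect.iscoroutinefunction` true on the decorated tool, so a framework that checks it still awaits the tool.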

Health Checks and Circuit Breakers

# resilient_server.py
import os
from datetime import datetime, timezone

import aiohttp
import redis.asyncio as redis  # aioredis has been merged into redis-py
from circuitbreaker import CircuitBreaker
from fastmcp import Context, FastMCP

mcp = FastMCP("Resilient Server")

REDIS_URL = os.environ.get("REDIS_URL", "redis://localhost:6379")

# Circuit breaker for external API calls (the `circuitbreaker` package on PyPI;
# recent releases support decorating async functions)
api_circuit_breaker = CircuitBreaker(
    failure_threshold=5,
    recovery_timeout=30,
    expected_exception=Exception,
)

# Defined once at module level so every call shares the breaker's failure count
@api_circuit_breaker
async def fetch_json(endpoint: str) -> dict:
    timeout = aiohttp.ClientTimeout(total=10)
    async with aiohttp.ClientSession(timeout=timeout) as session:
        async with session.get(endpoint) as response:
            response.raise_for_status()  # count HTTP errors as failures too
            return await response.json()

@mcp.tool
async def call_external_api(endpoint: str, ctx: Context) -> dict:
    """Call external API with circuit breaker protection."""
    try:
        return await fetch_json(endpoint)
    except Exception as e:
        # Also catches the breaker's own error while the circuit is open
        await ctx.error(f"API call failed: {e}")
        return {"error": "Service temporarily unavailable"}

@mcp.resource("health://status")
async def health_check() -> dict:
    """Comprehensive health check."""
    health_status = {
        "status": "healthy",
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "checks": {},
    }

    # Check database connection
    try:
        # Database health check logic goes here
        health_status["checks"]["database"] = "healthy"
    except Exception:
        health_status["checks"]["database"] = "unhealthy"
        health_status["status"] = "unhealthy"

    # Check Redis connection
    client = redis.from_url(REDIS_URL)
    try:
        await client.ping()
        health_status["checks"]["redis"] = "healthy"
    except Exception:
        health_status["checks"]["redis"] = "unhealthy"
        # Don't overwrite a more severe "unhealthy" status
        if health_status["status"] == "healthy":
            health_status["status"] = "degraded"
    finally:
        await client.aclose()

    return health_status
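Under the hood, a circuit breaker is a small state machine: closed (calls pass through and failures are counted), open (calls fail immediately until `recovery_timeout` has elapsed), and half-open (the next call is a trial that either closes the circuit or reopens it). The minimal synchronous sketch below uses only the standard library and an injectable clock so the timing can be tested. It is not the `circuitbreaker` package's implementation, and it resets the failure count after any success.

```python
import time
from typing import Any, Callable


class CircuitOpenError(RuntimeError):
    """Raised instead of calling the protected function while the circuit is open."""


class SimpleCircuitBreaker:
    def __init__(self, failure_threshold: int = 5, recovery_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.recovery_timeout = recovery_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at: float | None = None

    @property
    def state(self) -> str:
        if self.opened_at is None:
            return "closed"
        if self.clock() - self.opened_at >= self.recovery_timeout:
            return "half-open"
        return "open"

    def call(self, func: Callable[..., Any], *args: Any, **kwargs: Any) -> Any:
        if self.state == "open":
            raise CircuitOpenError("circuit open; failing fast")
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            # A failed trial call in half-open state reopens the circuit immediately
            if self.state == "half-open" or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            raise
        # Success (including a half-open trial) closes the circuit
        self.failures = 0
        self.opened_at = None
        return result
```

Failing fast while open is the point of the pattern: callers get an immediate error they can turn into a fallback response, and the struggling service gets time to recover instead of a pile of retries.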

Security Best Practices

Input Validation and Sanitization

# secure_tools.py
import html
import re
import uuid

from fastmcp import FastMCP
from pydantic import BaseModel, Field, field_validator

mcp = FastMCP("Secure Server")

EMAIL_PATTERN = re.compile(r"[a-zA-Z0-9._%+-]+@[a-zA-Z0-9.-]+\.[a-zA-Z]{2,}")

class UserInput(BaseModel):
    # Declarative constraints: 3-20 letters, numbers, or underscores
    username: str = Field(min_length=3, max_length=20, pattern=r"^[a-zA-Z0-9_]+$")
    email: str
    age: int = Field(ge=0, le=150)

    # Pydantic v2 validator (the v1 `validator` decorator is deprecated)
    @field_validator("email")
    @classmethod
    def validate_email(cls, v: str) -> str:
        # fullmatch rejects trailing characters such as a newline
        if not EMAIL_PATTERN.fullmatch(v):
            raise ValueError("Invalid email format")
        return v

@mcp.tool
def create_user(user_data: UserInput) -> dict:
    """Create a user with validated input."""
    # FastMCP validates the arguments against UserInput before this runs
    return {
        "user_id": str(uuid.uuid4()),
        "username": user_data.username,
        "email": user_data.email,
        "status": "created",
    }

@mcp.tool
def sanitize_html_content(content: str) -> str:
    """Escape HTML special characters so the content renders as plain text."""
    # html.escape neutralizes all markup rather than sanitizing it; to keep some
    # tags, use an allowlist-based sanitizer library such as nh3 instead
    return html.escape(content)
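One subtlety in hand-written regex validators: with `re.match`, a pattern ending in `$` still accepts a single trailing newline, because `$` also matches just before a newline at the end of the string. `re.fullmatch` requires the entire string to match. A quick stdlib demonstration using the username pattern from this section:

```python
import re

USERNAME = r"[a-zA-Z0-9_]+"


def loose_check(value: str) -> bool:
    # ^...$ with re.match: "$" also matches right before a trailing "\n"
    return re.match(rf"^{USERNAME}$", value) is not None


def strict_check(value: str) -> bool:
    # fullmatch anchors at both ends of the entire string
    return re.fullmatch(USERNAME, value) is not None
```

`loose_check("alice\n")` returns `True` while `strict_check("alice\n")` returns `False`. Pydantic v2's `Field(pattern=...)` uses a Rust regex engine by default, in which `$` matches only at the very end of the input, so the pitfall mainly affects validators that call Python's `re` module directly.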

Rate Limiting and Throttling

# rate_limited_server.py
import asyncio

from fastmcp import FastMCP
from fastmcp.server.middleware.rate_limiting import RateLimitingMiddleware

mcp = FastMCP("Rate Limited Server")

# Assumes a FastMCP release with middleware support (2.9+); verify the class and
# parameter names for your version. Roughly 60 requests per minute with bursts of 10.
# State is kept in memory, so each server process enforces its own limit.
mcp.add_middleware(
    RateLimitingMiddleware(max_requests_per_second=1.0, burst_capacity=10)
)

@mcp.tool
async def expensive_operation(data: str) -> dict:
    """An expensive operation that should be rate limited."""
    # Simulate expensive processing without blocking the event loop
    await asyncio.sleep(2)
    return {"processed": True, "data_length": len(data)}
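A token bucket is one common way to express "about 60 requests per minute with bursts of 10": the bucket holds at most `capacity` tokens, refills at `rate` tokens per second, and each request spends one token or is rejected. The stdlib sketch below keeps one bucket per client key and takes an injectable clock for testing. It keeps state in memory, so each process enforces its own limit; enforcing a single limit across several replicas requires shared storage such as Redis.

```python
import time
from typing import Callable


class TokenBucket:
    """Allow `rate` requests per second on average, with bursts of up to `capacity`."""

    def __init__(self, rate: float, capacity: int, clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)  # start full so an initial burst is allowed
        self.updated = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to the elapsed time, never beyond capacity
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class PerClientLimiter:
    """Keep one bucket per client key (e.g. an API key or user ID)."""

    def __init__(self, rate: float, capacity: int, clock: Callable[[], float] = time.monotonic) -> None:
        self.rate, self.capacity, self.clock = rate, capacity, clock
        self.buckets: dict[str, TokenBucket] = {}

    def allow(self, client: str) -> bool:
        if client not in self.buckets:
            self.buckets[client] = TokenBucket(self.rate, self.capacity, self.clock)
        return self.buckets[client].allow()
```

With `rate=1.0` and `capacity=10`, a client can fire ten requests at once, then one more per second; a client that stays idle for ten seconds or longer has its full burst back.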

Performance Optimization

Async Operations and Connection Pooling

# optimized_server.py
import asyncio
import os
from contextlib import asynccontextmanager

import aiohttp
import asyncpg
from fastmcp import Context, FastMCP

class DatabasePool:
    def __init__(self):
        self.pool = None

    async def create_pool(self):
        self.pool = await asyncpg.create_pool(
            os.environ["DATABASE_URL"],  # e.g. postgresql://user:pass@host/db
            min_size=10,
            max_size=20,
        )

    async def close_pool(self):
        if self.pool:
            await self.pool.close()

db_pool = DatabasePool()

@asynccontextmanager
async def lifespan(server: FastMCP):
    # Startup: FastMCP passes the server instance to the lifespan function
    await db_pool.create_pool()
    try:
        yield
    finally:
        # Shutdown: runs even if the server exits with an error
        await db_pool.close_pool()

mcp = FastMCP("Optimized Server", lifespan=lifespan)

@mcp.tool
async def get_recent_orders(customer_id: int, limit: int = 10) -> dict:
    """Fetch a customer's recent orders using the connection pool."""
    # Parameterized query: never execute SQL text supplied by the client.
    # The "orders" table and its columns are hypothetical.
    async with db_pool.pool.acquire() as connection:
        rows = await connection.fetch(
            "SELECT id, total, created_at FROM orders "
            "WHERE customer_id = $1 ORDER BY created_at DESC LIMIT $2",
            customer_id,
            limit,
        )
    return {"rows": [dict(row) for row in rows]}

@mcp.tool
async def batch_http_requests(urls: list[str], ctx: Context) -> list[dict]:
    """Make multiple HTTP requests concurrently."""
    await ctx.info(f"Making {len(urls)} concurrent requests...")
    semaphore = asyncio.Semaphore(10)  # cap the number of simultaneous requests

    async def fetch(session: aiohttp.ClientSession, url: str) -> dict:
        async with semaphore:
            try:
                # "async with" releases the connection back to the session's pool
                async with session.get(url) as response:
                    return {"url": url, "status": response.status, "data": await response.text()}
            except Exception as e:
                return {"url": url, "error": str(e)}

    timeout = aiohttp.ClientTimeout(total=30)
    async with aiohttp.ClientSession(timeout=timeout) as session:
        return await asyncio.gather(*(fetch(session, url) for url in urls))
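Passing a long list of coroutines straight to `asyncio.gather` starts them all at once. Wrapping each one in an `asyncio.Semaphore` caps how many run concurrently, which protects both the remote service and your own connection pool. In the stdlib sketch below, `gather_bounded` is a reusable helper, and the hypothetical `ConcurrencyProbe.fake_fetch` coroutine stands in for the HTTP call while recording peak concurrency.

```python
import asyncio


async def gather_bounded(items, worker, limit: int):
    """Run `worker(item)` for every item with at most `limit` running at once.

    Results come back in input order; exceptions are returned rather than raised.
    """
    semaphore = asyncio.Semaphore(limit)

    async def run(item):
        async with semaphore:
            return await worker(item)

    return await asyncio.gather(*(run(item) for item in items), return_exceptions=True)


class ConcurrencyProbe:
    """Tracks how many fake requests are in flight (for demonstration only)."""

    def __init__(self) -> None:
        self.active = 0
        self.peak = 0

    async def fake_fetch(self, url: str) -> dict:
        self.active += 1
        self.peak = max(self.peak, self.active)
        try:
            await asyncio.sleep(0.01)  # stands in for network latency
            if url.endswith("/bad"):
                raise ConnectionError(f"failed to fetch {url}")
            return {"url": url, "status": 200}
        finally:
            self.active -= 1
```

Running 21 fake requests with `limit=5` never has more than five in flight, and the failing URL comes back as a `ConnectionError` object in its slot instead of cancelling the whole batch.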

Conclusion

FastMCP 2.0 provides a comprehensive platform for building production-ready MCP servers that can scale from simple development tools to enterprise-grade applications. Key takeaways:

Development Best Practices

- Use modular server composition for maintainable code

- Implement comprehensive error handling and validation

- Add proper logging and monitoring from the start

- Test your tools and resources thoroughly

Production Readiness

- Implement proper authentication and authorization

- Use environment-based configuration

- Add health checks and circuit breakers

- Monitor performance and resource usage

Deployment Strategies

- Containerize your applications for consistency

- Use orchestration platforms like Kubernetes for scale

- Implement proper CI/CD pipelines

- Plan for high availability and disaster recovery

Security Considerations

- Validate and sanitize all inputs

- Implement rate limiting and throttling

- Use HTTPS and proper authentication

- Perform regular security audits and dependency updates

FastMCP makes it possible to build sophisticated AI-powered applications that integrate seamlessly with modern development workflows and production infrastructure. Whether you're building development tools, data processing services, or complex enterprise applications, FastMCP provides the foundation you need to succeed.

Happy building with FastMCP! 🚀